Softdel signs TECH VEDA for corporate training on Embedded Linux

Softdel signs TECH VEDA for delivering corporate training program on Embedded Linux. Softdel becomes our 49th client.

As the world races into the Edge AI era, the way we build and deploy smart devices is changing rapidly. From intelligent cameras and drones to next-generation industrial automation, modern systems demand not only smarter hardware, but also smarter software. For engineers and enthusiasts stepping into this space, there’s one question that comes up again and again:

“Should I dive straight into Linux device drivers, or start with kernel programming?”

Let’s break down why learning Linux kernel programming first is not just smart but essential for anyone serious about building robust, efficient, and future-ready Edge AI solutions.

1. Edge AI: More Than Just Hardware

Edge AI means running powerful machine learning, vision, and data-processing tasks directly on embedded devices—not in the cloud. This brings unique challenges:

The Linux kernel is the engine room for all of this. If you want your drivers or applications to work efficiently, you need to understand how the kernel manages memory, scheduling, synchronization, and communication beneath the surface.
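The kernel's memory accounting, for example, is visible from user space. As a small sketch, assuming a Linux system with procfs mounted at `/proc`, the snippet below parses `/proc/meminfo`, where the kernel publishes its own view of memory. `read_meminfo` is a hypothetical helper name, not a standard API, and values are kept in the units the kernel reports (kB for most fields).

```python
from pathlib import Path


def read_meminfo(path: str = "/proc/meminfo") -> dict[str, int]:
    """Parse /proc/meminfo into {field: value}.

    Most lines look like "MemTotal:       16303340 kB" (value in kB);
    a few fields such as HugePages_Total carry a bare count with no unit.
    """
    info: dict[str, int] = {}
    for line in Path(path).read_text().splitlines():
        key, _, rest = line.partition(":")
        fields = rest.split()
        if fields:
            # Keep only the numeric value; the optional "kB" suffix is dropped.
            info[key.strip()] = int(fields[0])
    return info


if __name__ == "__main__":
    mem = read_meminfo()
    # MemAvailable is the kernel's estimate of memory usable without swapping.
    print(f"MemTotal:     {mem['MemTotal']} kB")
    print(f"MemAvailable: {mem.get('MemAvailable', 'n/a')} kB")
```

Reading a file like this is only the user-space end; kernel programming is what explains where numbers such as `MemAvailable` come from and why they move.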

2. Drivers Stand on the Shoulders of the Kernel

A Linux device driver isn’t magic—it’s a bridge between hardware and the kernel. Writing a driver without knowing how the kernel works is like building a house without understanding its foundation.
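The bridge is visible even from user space: every device file carries a major/minor number that the kernel uses to route system calls on that file to the driver that registered it. A minimal sketch, assuming Linux with `/dev/urandom` present (conventionally character device 1:9); `describe_char_device` and `read_from_device` are illustrative helper names, not a library API.

```python
import os
import stat


def describe_char_device(path: str) -> tuple[int, int]:
    """Return the (major, minor) pair the kernel uses to find the owning driver."""
    st = os.stat(path)
    if not stat.S_ISCHR(st.st_mode):
        raise ValueError(f"{path} is not a character device")
    return os.major(st.st_rdev), os.minor(st.st_rdev)


def read_from_device(path: str, nbytes: int) -> bytes:
    """Read up to nbytes from a device file.

    Each os.read() issues a read() syscall; the kernel dispatches it to the
    read handler in the driver's file_operations.
    """
    fd = os.open(path, os.O_RDONLY)
    try:
        return os.read(fd, nbytes)
    finally:
        os.close(fd)


if __name__ == "__main__":
    major, minor = describe_char_device("/dev/urandom")
    print(f"/dev/urandom -> char device {major}:{minor}")
    print(read_from_device("/dev/urandom", 8).hex())
```

Writing the driver side means implementing those handlers yourself, which is where the kernel concepts below come in.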

Key kernel concepts every Edge AI developer needs:

Mastering these before you write driver code makes your drivers more stable, faster, and easier to debug.

3. Edge AI Makes Everything More Intense

In Edge AI, the pressure on your software is higher:

Kernel programming gives you the principles and tools to diagnose these problems, debug them, and optimize around them. You’ll know how to use ftrace, perf, eBPF, and other tools to see what’s really happening inside your device.

4. Stand Out in the Job Market

Companies building Edge AI, robotics, and next-gen IoT products are looking for engineers who “get” the kernel, not ones who just copy and paste driver code.

Why?

Because the best engineers don’t just make things work—they make them work well. If you can diagnose kernel memory issues, optimize latency, or trace mysterious bugs, you’re the one who gets the offer.

5. Your Learning Path: Foundation First, Drivers Next

Think of Linux kernel programming as the “language” of embedded and Edge AI systems. Once you’re fluent, learning to write device drivers is like picking up a new dialect—it’s easier, faster, and much less frustrating.

Recommended roadmap:

Ready to Future-Proof Your Skills?

If you’re serious about building the next generation of Edge AI products, start with the Linux kernel. It’s the rock-solid foundation your entire career will stand on.

Explore our Linux Kernel Programming Foundation Course and join a community of engineers building the future—one line of kernel code at a time.


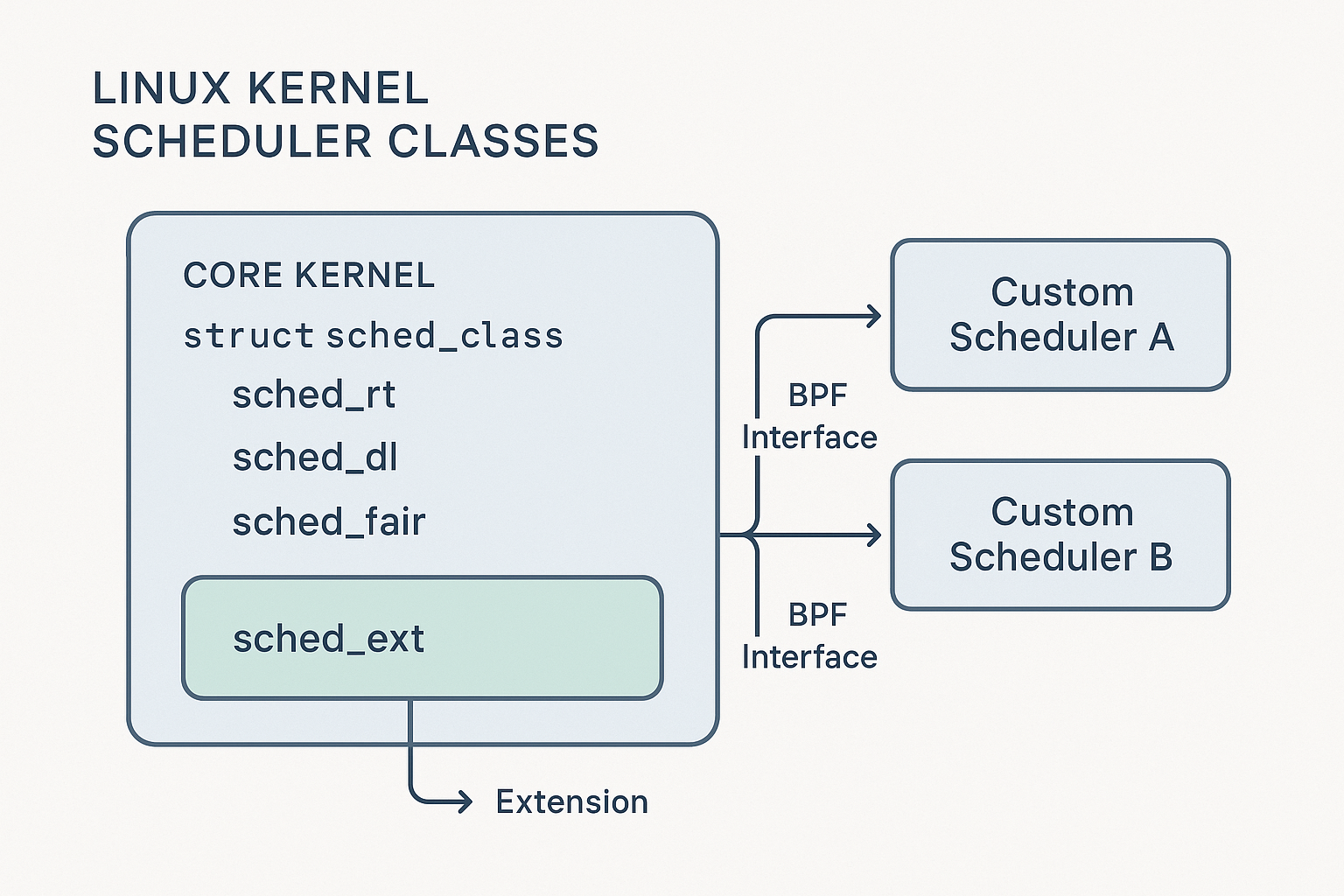

This article provides a deep dive into the major scheduler classes, their unique design goals, and the management utilities powering the system.
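From user space, each scheduling policy maps onto one of the kernel's scheduler classes, and the kernel reports the static-priority range it accepts for each. A minimal sketch using Python's standard `os` wrappers for the `sched_*` system calls, assuming Linux; `POLICIES`, `priority_ranges`, and `current_policy_name` are illustrative names, not part of any library.

```python
import os

# Policies exposed by Python's os module on Linux. SCHED_OTHER (SCHED_NORMAL
# inside the kernel), SCHED_BATCH and SCHED_IDLE are handled by the fair class;
# SCHED_FIFO and SCHED_RR by the real-time class. SCHED_DEADLINE is not exposed.
POLICIES = {
    "SCHED_OTHER": os.SCHED_OTHER,
    "SCHED_BATCH": os.SCHED_BATCH,
    "SCHED_IDLE": os.SCHED_IDLE,
    "SCHED_FIFO": os.SCHED_FIFO,
    "SCHED_RR": os.SCHED_RR,
}


def priority_ranges() -> dict[str, tuple[int, int]]:
    """Static-priority range (min, max) the kernel accepts for each policy."""
    return {
        name: (os.sched_get_priority_min(p), os.sched_get_priority_max(p))
        for name, p in POLICIES.items()
    }


def current_policy_name(pid: int = 0) -> str:
    """Name of the scheduling policy for pid (0 means the calling process)."""
    policy = os.sched_getscheduler(pid)
    for name, value in POLICIES.items():
        if value == policy:
            return name
    return f"unknown({policy})"


if __name__ == "__main__":
    for name, (lo, hi) in priority_ranges().items():
        print(f"{name:12} priority {lo}..{hi}")
    print("this process:", current_policy_name())
```

On a typical Linux system the real-time policies accept priorities 1..99 while the fair-class policies accept only 0, and an ordinary process reports `SCHED_OTHER`. Moving a task to `SCHED_FIFO` with `os.sched_setscheduler` usually requires `CAP_SYS_NICE` or a suitable `RLIMIT_RTPRIO`.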

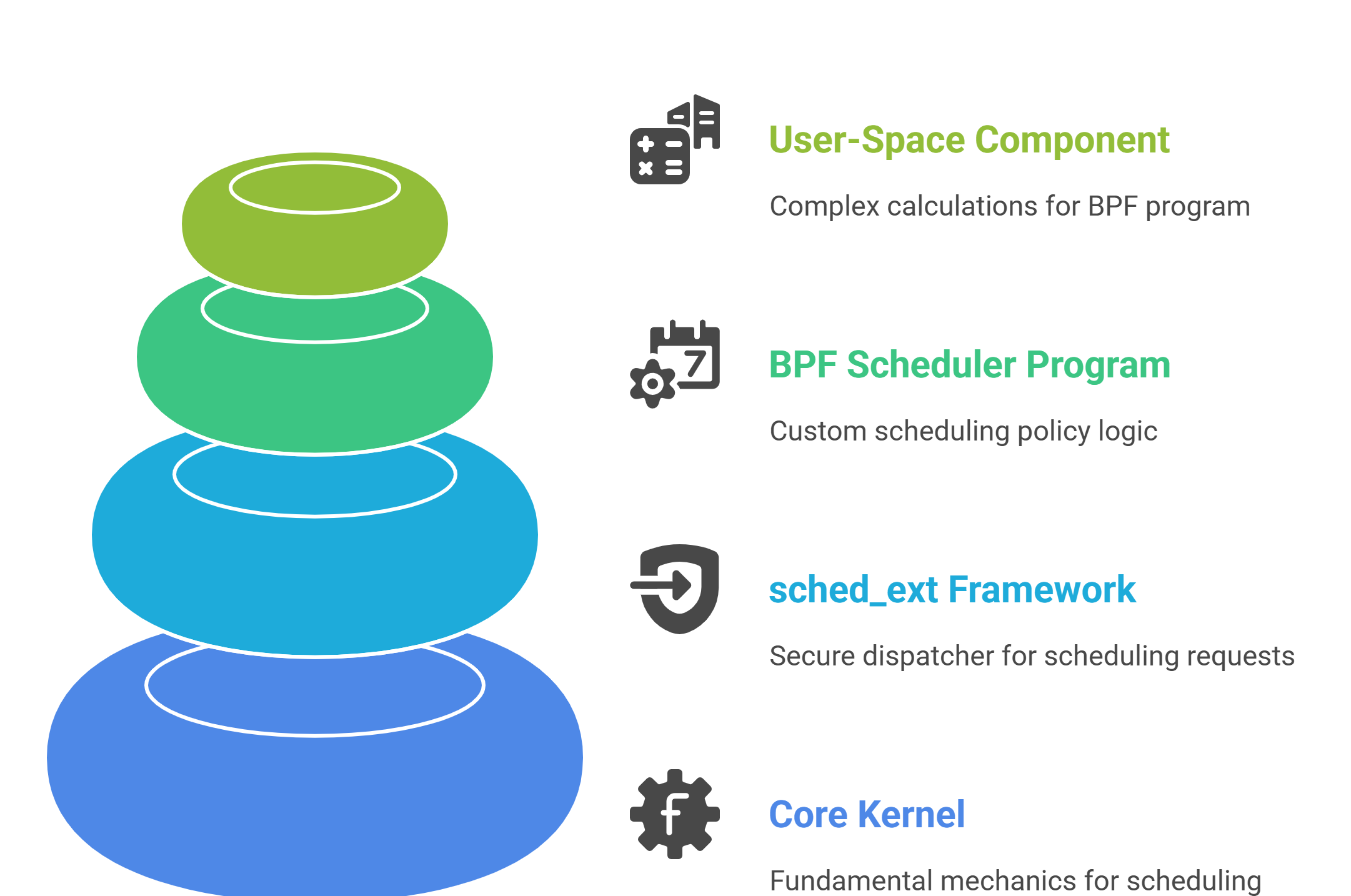

sched_ext is not a scheduler; it’s a framework that securely connects custom BPF programs to the core kernel. Its architecture consists of four distinct layers that separate responsibilities cleanly.
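Because sched_ext is a framework rather than a scheduler, a useful runtime question is whether a BPF scheduler is currently attached, and which one. A hedged sketch, assuming a kernel built with `CONFIG_SCHED_CLASS_EXT` (sched_ext was merged in Linux 6.12) that exposes `/sys/kernel/sched_ext/state` and `/sys/kernel/sched_ext/root/ops` as described in the kernel's sched-ext documentation; `sched_ext_status` is a hypothetical helper, and the `root` parameter exists only so the logic can be exercised against a fake directory.

```python
from pathlib import Path
from typing import Optional

# Assumption: sysfs location used by kernels with CONFIG_SCHED_CLASS_EXT.
SCX_SYSFS = Path("/sys/kernel/sched_ext")


def sched_ext_status(root: Path = SCX_SYSFS) -> Optional[dict[str, str]]:
    """Return {'state': ..., 'ops': ...}, or None if sched_ext is unavailable."""
    state_file = root / "state"
    if not state_file.exists():
        return None  # kernel lacks sched_ext, or sysfs is not mounted
    status = {"state": state_file.read_text().strip()}
    ops_file = root / "root" / "ops"
    if ops_file.exists():
        # Name of the BPF scheduler currently attached through the framework.
        status["ops"] = ops_file.read_text().strip()
    return status


if __name__ == "__main__":
    print(sched_ext_status())
```

On older kernels the function simply returns `None`, which keeps the probe safe to run anywhere.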

For decades, general-purpose schedulers such as CFS and, more recently, EEVDF powered everything from phones to supercomputers. But as hardware grew more complex and software more specialized, the “one-size-fits-all” scheduling model began to crack. This tension set the stage for sched_ext.