Softdel signs TECH VEDA for corporate training on Embedded Linux

Softdel signs TECH VEDA for delivering corporate training program on Embedded Linux. Softdel becomes our 49th client.

The Linux kernel is arguably the most versatile operating system kernel in the world, powering everything from tiny embedded sensors to the world’s largest supercomputers. This adaptability means that "booting Linux" is not a single, uniform sequence. It is a collection of highly specialized strategies, each tailored to the hardware and security constraints of its platform.

This series will explore these divergent paths, from the familiar PC to the specialized worlds of embedded systems, mobile devices, and mainframes. To begin, we must first establish a common language—a conceptual framework that applies to every boot process, regardless of the underlying architecture.

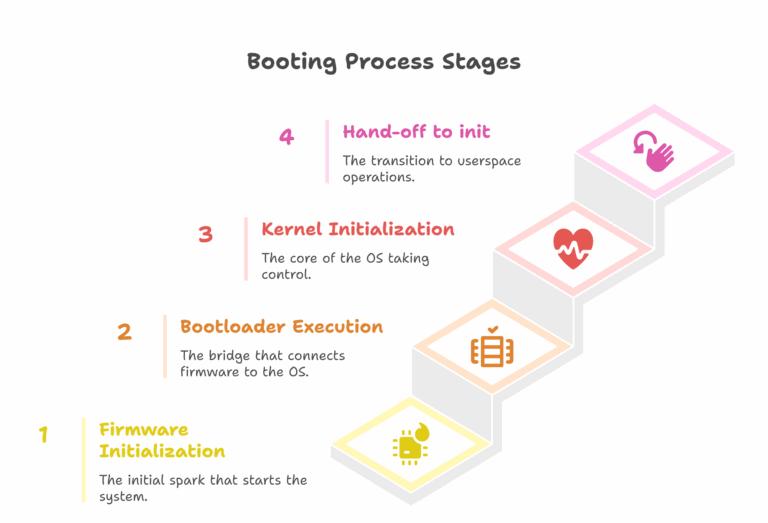

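At its core, booting is the procedure that takes a system from inert hardware to a fully operational state. It happens in four fundamental stages, each building on the last: firmware initialization, the bootloader, kernel initialization, and the hand-off to user space through the init process.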

Stage 1: Firmware Initialization (The First Spark)

The moment a device is powered on, the CPU begins executing instructions at a fixed reset address. That address maps to a program stored in non-volatile memory such as a ROM or flash chip, and this program is the system firmware. On PCs, the firmware's first job is the Power-On Self-Test (POST), which initializes and verifies critical hardware such as the memory controllers. This stage solves the most basic problem: making the system’s main RAM usable for the larger programs that follow. It concludes when the firmware identifies a bootable device and hands control to the first piece of software it finds there: the bootloader.
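On a classic legacy-BIOS PC, "identifies a bootable device" has a concrete meaning. The firmware walks its configured boot order and picks the first disk whose first 512-byte sector ends with the signature bytes 0x55 0xAA. The sketch below models that scan over in-memory sector images. The `struct sector` type and the function names are hypothetical, and UEFI firmware locates bootloaders by a different mechanism.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

#define SECTOR_SIZE 512

/* One disk's first sector (the boot sector on a legacy BIOS system). */
struct sector {
    uint8_t bytes[SECTOR_SIZE];
};

/* Legacy BIOS convention: a boot sector ends with 0x55 0xAA at offsets 510-511. */
int is_bootable_sector(const struct sector *s)
{
    assert(s != NULL);
    return s->bytes[510] == 0x55 && s->bytes[511] == 0xAA;
}

/*
 * Hypothetical model of the firmware's boot-order scan. `disks` holds the
 * first sector of each disk in boot-priority order. Returns the index of the
 * first bootable disk, or -1 if none qualifies.
 */
int find_boot_device(const struct sector *disks, size_t count)
{
    assert(disks != NULL || count == 0);
    for (size_t i = 0; i < count; i++) {
        if (is_bootable_sector(&disks[i]))
            return (int)i;  /* firmware would load this sector and jump to its code */
    }
    return -1;
}
```

Because the scan stops at the first match, a second bootable disk further down the boot order is never consulted. This is why changing the boot order in firmware settings changes which bootloader runs.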

The bootloader is the crucial intermediary between the firmware and the operating system kernel. While firmware can initialize hardware, it typically doesn’t understand complex filesystems like ext4 or btrfs where the OS resides. The bootloader’s purpose is to bridge this gap. It contains just enough logic to navigate the filesystem, find the kernel image, load it into RAM, and pass it essential configuration data. This stage can be a single program, like GRUB2 on a PC, or a multi-stage chain, as is common in embedded systems.
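One piece of the configuration data a Linux bootloader passes is the kernel command line. This is a space-separated string such as `console=ttyS0,115200 root=/dev/mmcblk0p2 rootfstype=ext4`. The sketch below shows how such a string can be searched for a `key=value` parameter. The helper `cmdline_get` is hypothetical: it returns the first match and ignores details the real kernel parser handles, such as quoted values containing spaces.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/*
 * Hypothetical helper: copy the value of `key` from a space-separated kernel
 * command line into `out` (truncated to fit). Returns 1 if found, 0 otherwise.
 */
int cmdline_get(const char *cmdline, const char *key, char *out, size_t outlen)
{
    assert(cmdline != NULL && key != NULL && out != NULL && outlen > 0);
    size_t keylen = strlen(key);
    const char *p = cmdline;

    while (*p) {
        while (*p == ' ')                   /* skip separators between parameters */
            p++;
        const char *end = strchr(p, ' ');
        size_t toklen = end ? (size_t)(end - p) : strlen(p);

        /* Match "key=" exactly at the start of this token, so "root" != "rootfstype". */
        if (toklen > keylen && strncmp(p, key, keylen) == 0 && p[keylen] == '=') {
            size_t vallen = toklen - keylen - 1;
            if (vallen >= outlen)
                vallen = outlen - 1;
            memcpy(out, p + keylen + 1, vallen);
            out[vallen] = '\0';
            return 1;
        }
        p += toklen;
    }
    return 0;
}
```

For example, looking up `root` in the string above yields `/dev/mmcblk0p2`. That value tells the kernel where the real root filesystem lives.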

Once loaded, the Linux kernel takes charge. It first decompresses itself into memory and begins initializing its own internal subsystems, like the process scheduler and memory management. It then uses its vast array of drivers to initialize all the system’s hardware.
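Inside the kernel, core pieces such as the scheduler are set up by direct calls early in `start_kernel()`. Much of the later subsystem and driver bring-up is ordered through initcall levels (core, subsys, fs, device, late, and others), which run one level at a time. This ordering ensures, for example, that a bus subsystem exists before the drivers that sit on it. The sketch below is a user-space model of that idea, not kernel code. It uses a simplified subset of the levels defined in `include/linux/init.h` (omitting pure, postcore, arch, and rootfs), and the struct and function names are hypothetical.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Simplified subset of the kernel's initcall levels, in execution order. */
enum initcall_level {
    LEVEL_CORE = 1,
    LEVEL_SUBSYS = 4,
    LEVEL_FS = 5,
    LEVEL_DEVICE = 6,   /* built-in drivers registered with module_init() land here */
    LEVEL_LATE = 7,
};

struct initcall {
    enum initcall_level level;
    const char *name;   /* hypothetical component name, used only for tracing */
};

/*
 * Run registered initcalls level by level, keeping registration order within
 * a level. Instead of calling real init functions, this model appends each
 * name to `trace` as a space-separated list.
 */
void run_initcalls(const struct initcall *calls, size_t n, char *trace, size_t tracelen)
{
    assert(trace != NULL && tracelen > 0);
    trace[0] = '\0';
    for (int level = LEVEL_CORE; level <= LEVEL_LATE; level++) {
        for (size_t i = 0; i < n; i++) {
            if ((int)calls[i].level != level)
                continue;
            if (trace[0] != '\0')
                strncat(trace, " ", tracelen - strlen(trace) - 1);
            strncat(trace, calls[i].name, tracelen - strlen(trace) - 1);
        }
    }
}
```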

However, the kernel faces its own bootstrapping dilemma. The final root filesystem might live on a device, such as an encrypted disk or a network share, that needs extra drivers or user-space tools before it can be mounted. To solve this, the bootloader usually also loads an initial RAM filesystem (initramfs), although the initramfs can also be built into the kernel image itself. The kernel uses the initramfs as a temporary root containing the drivers and tools needed to mount the real root filesystem.
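Per the kernel's documented buffer format, an initramfs is a cpio archive in the "newc" format. Each header starts with the ASCII magic `070701`, or `070702` when checksums are included. The archive is usually compressed with gzip, xz, zstd, or a similar algorithm. Before unpacking it into the temporary root, the kernel must therefore recognize what it was handed. The sketch below classifies a buffer by its leading magic bytes. The enum and function names are hypothetical, and only a few compression formats are covered.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

enum initrd_format { FMT_UNKNOWN, FMT_CPIO_NEWC, FMT_GZIP, FMT_XZ, FMT_ZSTD };

/* Hypothetical classifier: identify an initramfs image by its magic bytes. */
enum initrd_format detect_initrd_format(const uint8_t *buf, size_t len)
{
    static const uint8_t gzip_magic[] = { 0x1f, 0x8b };
    static const uint8_t xz_magic[]   = { 0xfd, '7', 'z', 'X', 'Z', 0x00 };
    static const uint8_t zstd_magic[] = { 0x28, 0xb5, 0x2f, 0xfd };

    assert(buf != NULL || len == 0);

    /* Uncompressed cpio "newc" header: "070701" (no CRC) or "070702" (with CRC). */
    if (len >= 6 && (memcmp(buf, "070701", 6) == 0 || memcmp(buf, "070702", 6) == 0))
        return FMT_CPIO_NEWC;
    if (len >= sizeof gzip_magic && memcmp(buf, gzip_magic, sizeof gzip_magic) == 0)
        return FMT_GZIP;
    if (len >= sizeof xz_magic && memcmp(buf, xz_magic, sizeof xz_magic) == 0)
        return FMT_XZ;
    if (len >= sizeof zstd_magic && memcmp(buf, zstd_magic, sizeof zstd_magic) == 0)
        return FMT_ZSTD;
    return FMT_UNKNOWN;
}
```

A compressed image would be decompressed first. The result is then expected to start with a cpio header, whose entries are extracted into the RAM-backed root.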

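The kernel’s final task is to start the first user-space program, which is assigned Process ID 1 (PID 1). This marks the critical transition from kernel space to user space. Without an initramfs, the kernel mounts the real root filesystem itself and runs the init program (typically /sbin/init). With an initramfs, the kernel runs the initramfs’s own /init, which mounts the real root filesystem, switches to it, and then executes the real init program, which keeps PID 1. On most modern desktop and server distributions, this init program is systemd, while embedded systems often use lighter alternatives such as BusyBox init. The init process is responsible for starting the system services, daemons, and graphical interfaces that make up a fully functional Linux environment.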
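The choice of PID 1 follows a fixed fallback order in the kernel’s `init/main.c`:

- If the initramfs provides /init (the path can be changed with `rdinit=`), it is run.
- Otherwise, an explicit `init=` command-line value is used, and the kernel panics if it cannot run.
- Failing both, /sbin/init, /etc/init, /bin/init, and /bin/sh are tried in turn.

The sketch below models only that ordering in user space. It treats "exists" as "executes successfully", omits build-time options such as CONFIG_DEFAULT_INIT, and uses hypothetical function names.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Model helper: is `path` in the list of executables that exist? */
static int path_present(const char *path, const char *const *present, size_t n)
{
    for (size_t i = 0; i < n; i++)
        if (strcmp(path, present[i]) == 0)
            return 1;
    return 0;
}

/*
 * Hypothetical model of how the kernel picks PID 1. `init_param` is the
 * init= value, or NULL if none was given. Returns the chosen path, or NULL
 * where the real kernel would panic.
 */
const char *pick_init(int have_initramfs, const char *init_param,
                      const char *const *present, size_t n)
{
    static const char *const fallbacks[] = {
        "/sbin/init", "/etc/init", "/bin/init", "/bin/sh",
    };

    assert(present != NULL || n == 0);

    /* 1. An initramfs that provides /init runs it first. */
    if (have_initramfs && path_present("/init", present, n))
        return "/init";

    /* 2. An explicit init= is not optional: if it cannot run, the kernel panics. */
    if (init_param != NULL)
        return path_present(init_param, present, n) ? init_param : NULL;

    /* 3. Otherwise, try the traditional locations in order. */
    for (size_t i = 0; i < sizeof fallbacks / sizeof fallbacks[0]; i++) {
        if (path_present(fallbacks[i], present, n))
            return fallbacks[i];
    }
    return NULL;  /* the real kernel panics with "No working init found" */
}
```

The /bin/sh entry is a last resort. It is why a badly broken system can sometimes still boot to a bare shell.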

In the next installment, we will apply this universal framework to the platform most of us use every day: the modern PC and server, exploring the revolutionary shift to the UEFI paradigm.


This article provides a deep dive into the major scheduler classes, their unique design goals, and the management utilities powering the system.

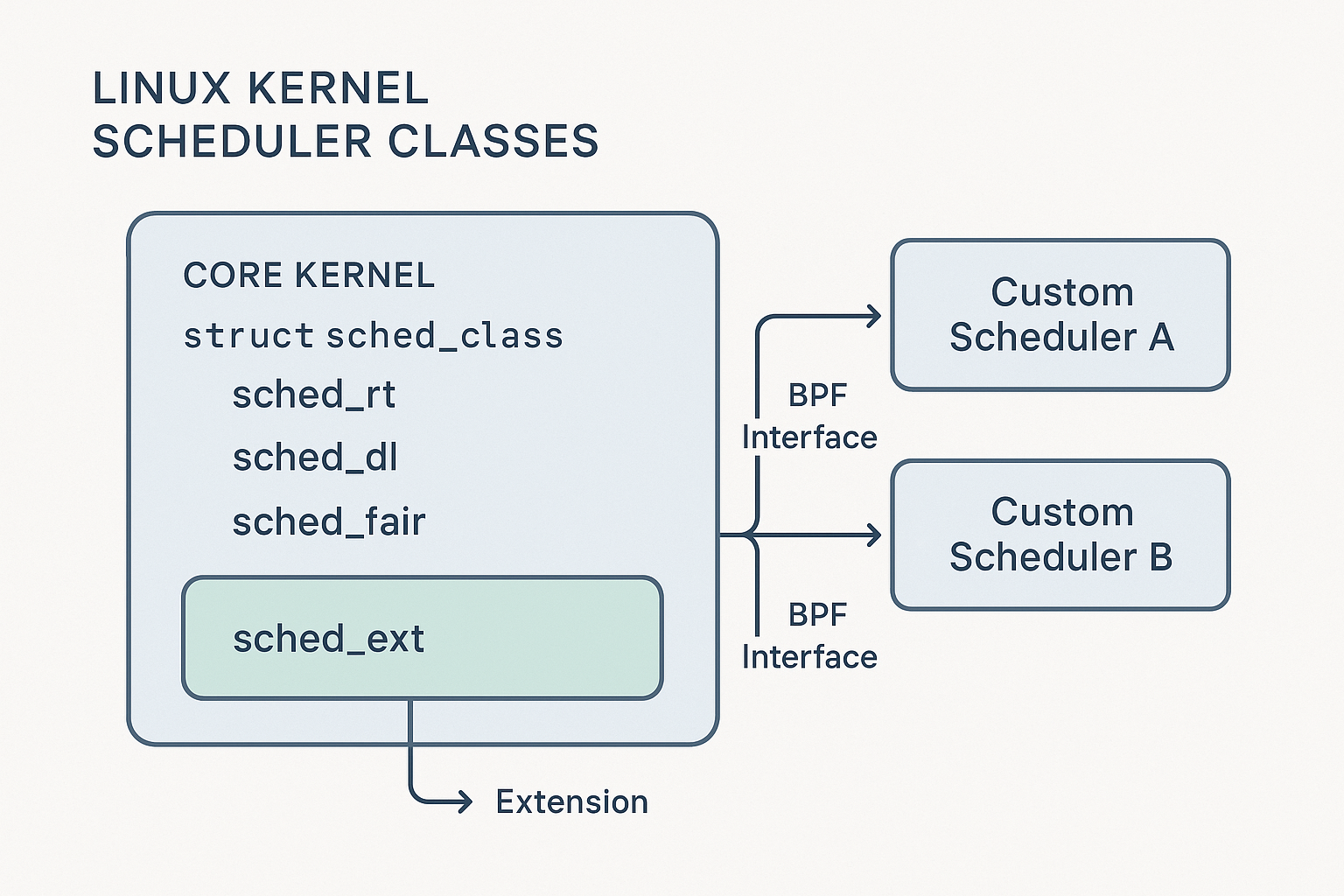

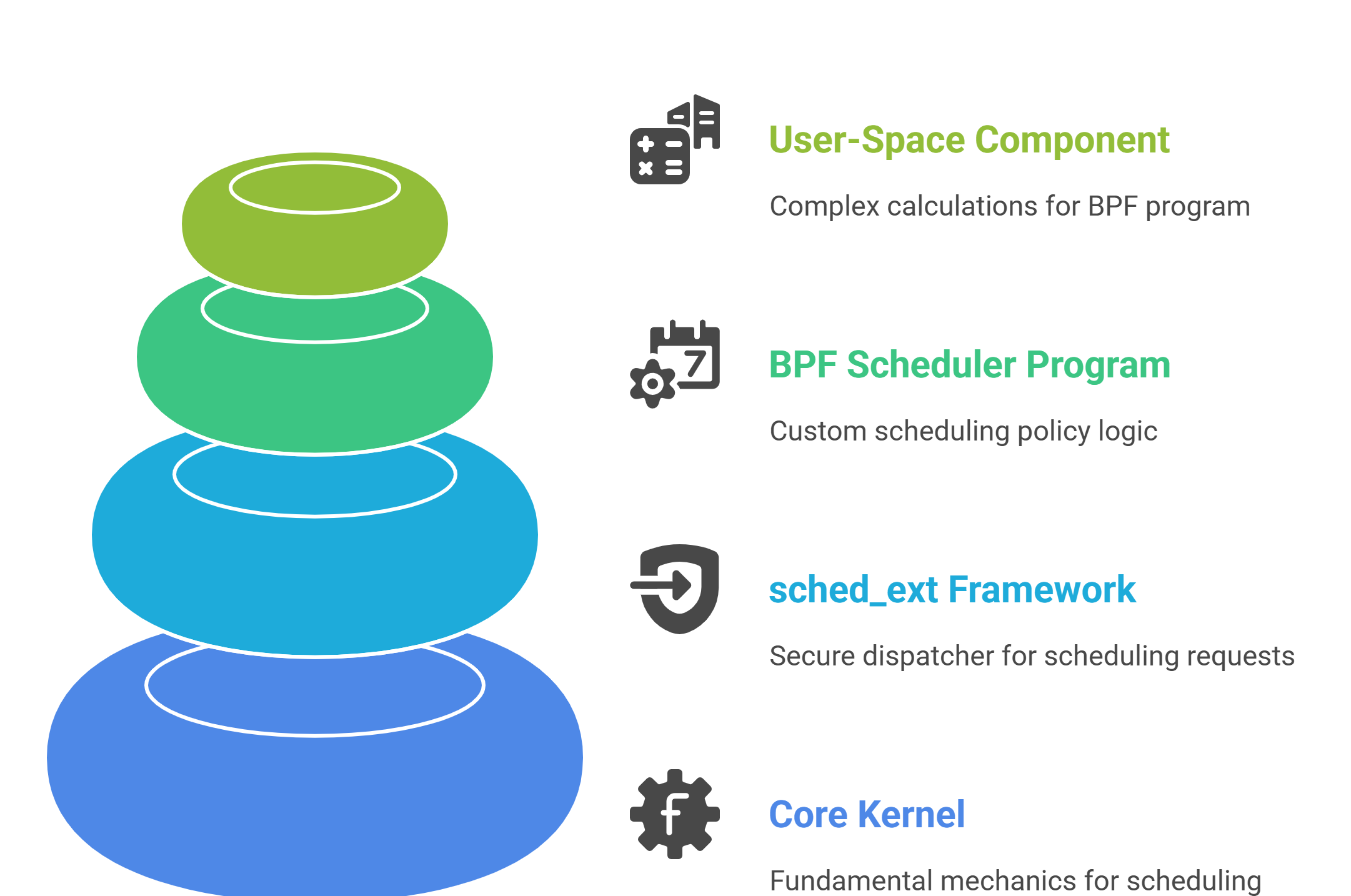

sched_ext is not a scheduler; it’s a framework that securely connects custom BPF programs to the core kernel. Its architecture consists of four distinct layers that separate responsibilities cleanly.
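The central idea of such a framework is a fixed core that delegates policy decisions to a pluggable table of callbacks, and this idea can be illustrated without BPF. In the real sched_ext, a BPF program supplies a `struct sched_ext_ops` whose callbacks include `enqueue` and `dispatch`. The user-space sketch below is only an analogy. Its `policy_ops` type, FIFO policy, and `core_run` function are hypothetical and are not the sched_ext API.

```c
#include <assert.h>
#include <stddef.h>

#define MAX_TASKS 16

/* Hypothetical policy interface: the core calls these, the policy decides. */
struct policy_ops {
    const char *name;
    void (*enqueue)(int task_id);   /* a task became runnable */
    int (*dispatch)(void);          /* pick the next task, or -1 if none */
};

/* Example policy: first-in, first-out. Its queue is private to the policy. */
static int fifo_queue[MAX_TASKS];
static size_t fifo_head, fifo_tail;

static void fifo_enqueue(int task_id)
{
    assert(fifo_tail < MAX_TASKS);
    fifo_queue[fifo_tail++] = task_id;
}

static int fifo_dispatch(void)
{
    return fifo_head < fifo_tail ? fifo_queue[fifo_head++] : -1;
}

const struct policy_ops fifo_ops = {
    .name = "fifo",
    .enqueue = fifo_enqueue,
    .dispatch = fifo_dispatch,
};

/*
 * The "core": it never touches the policy's data structures. It hands
 * runnable tasks to the policy, then records the order the policy picks.
 */
size_t core_run(const struct policy_ops *ops, const int *tasks, size_t n,
                int *order, size_t maxorder)
{
    size_t ran = 0;
    int next;

    for (size_t i = 0; i < n; i++)
        ops->enqueue(tasks[i]);
    while (ran < maxorder && (next = ops->dispatch()) != -1)
        order[ran++] = next;
    return ran;
}
```

Swapping in a different policy means supplying another `policy_ops` table, with no change to `core_run`. sched_ext applies the same separation, with the added safety checks that BPF brings.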

For decades, general-purpose schedulers such as CFS and, more recently, EEVDF have powered everything from phones to supercomputers. But as hardware grew more complex and software more specialized, the “one-size-fits-all” scheduling model began to crack. This tension set the stage for sched_ext.