Introduction

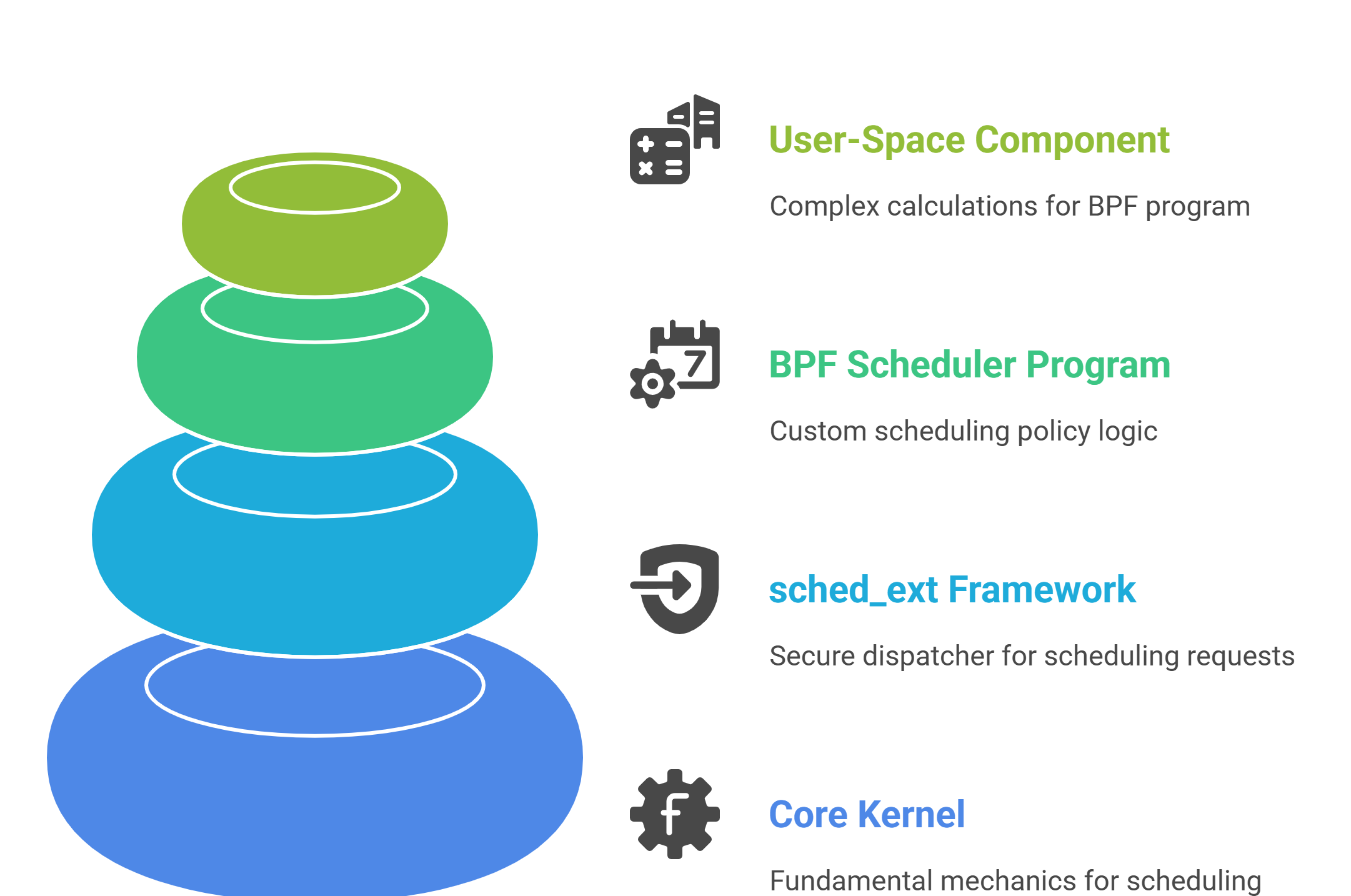

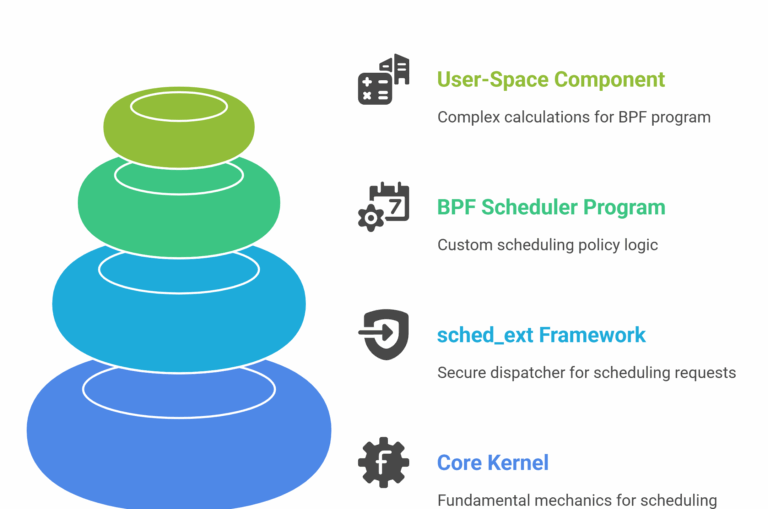

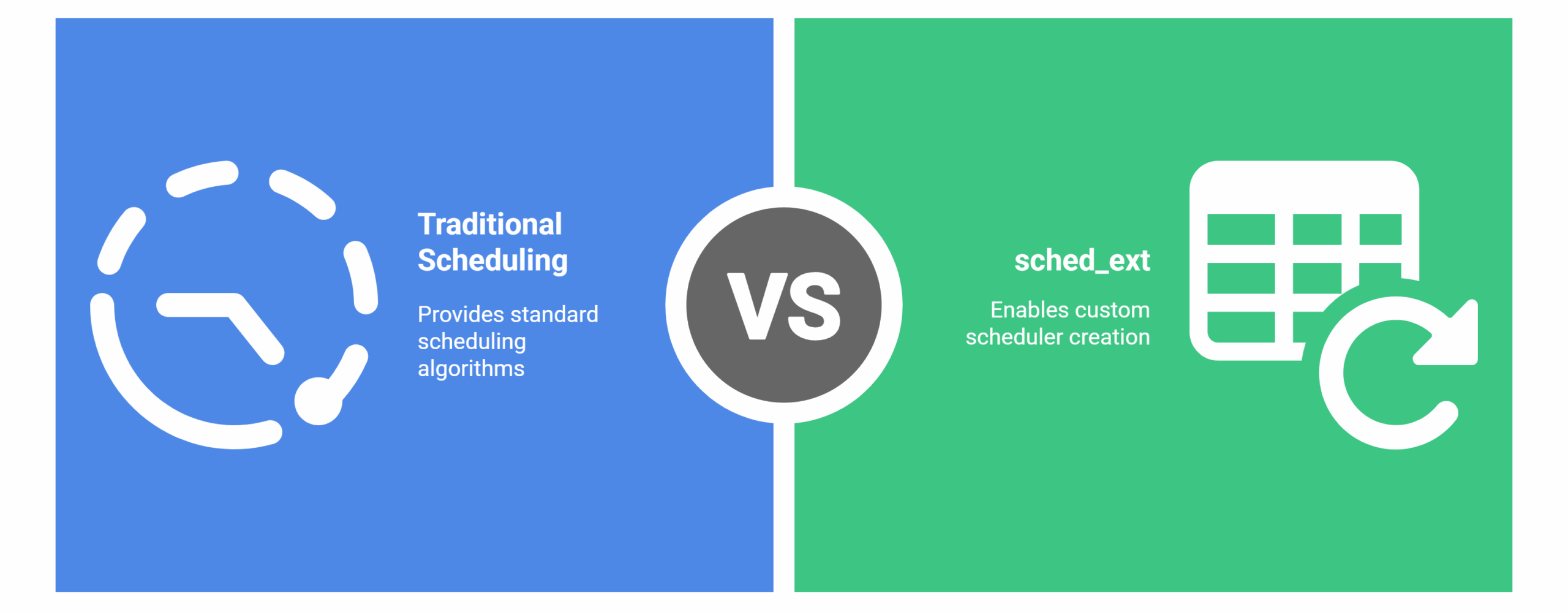

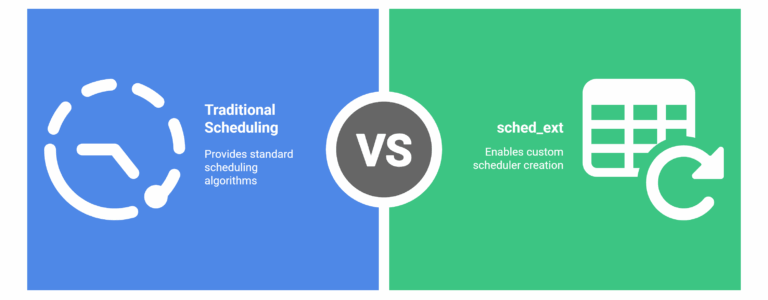

sched_ext is transforming Linux kernel scheduler development by letting developers implement scheduling policies as BPF programs that can be loaded and swapped at runtime. Since its inception as an RFC, and its merge into mainline in Linux 6.12, it has grown into a diverse ecosystem spanning custom schedulers, user-space tooling, and production-ready components. This article takes a deep dive into the major schedulers, their design goals, and the management utilities that tie the system together.

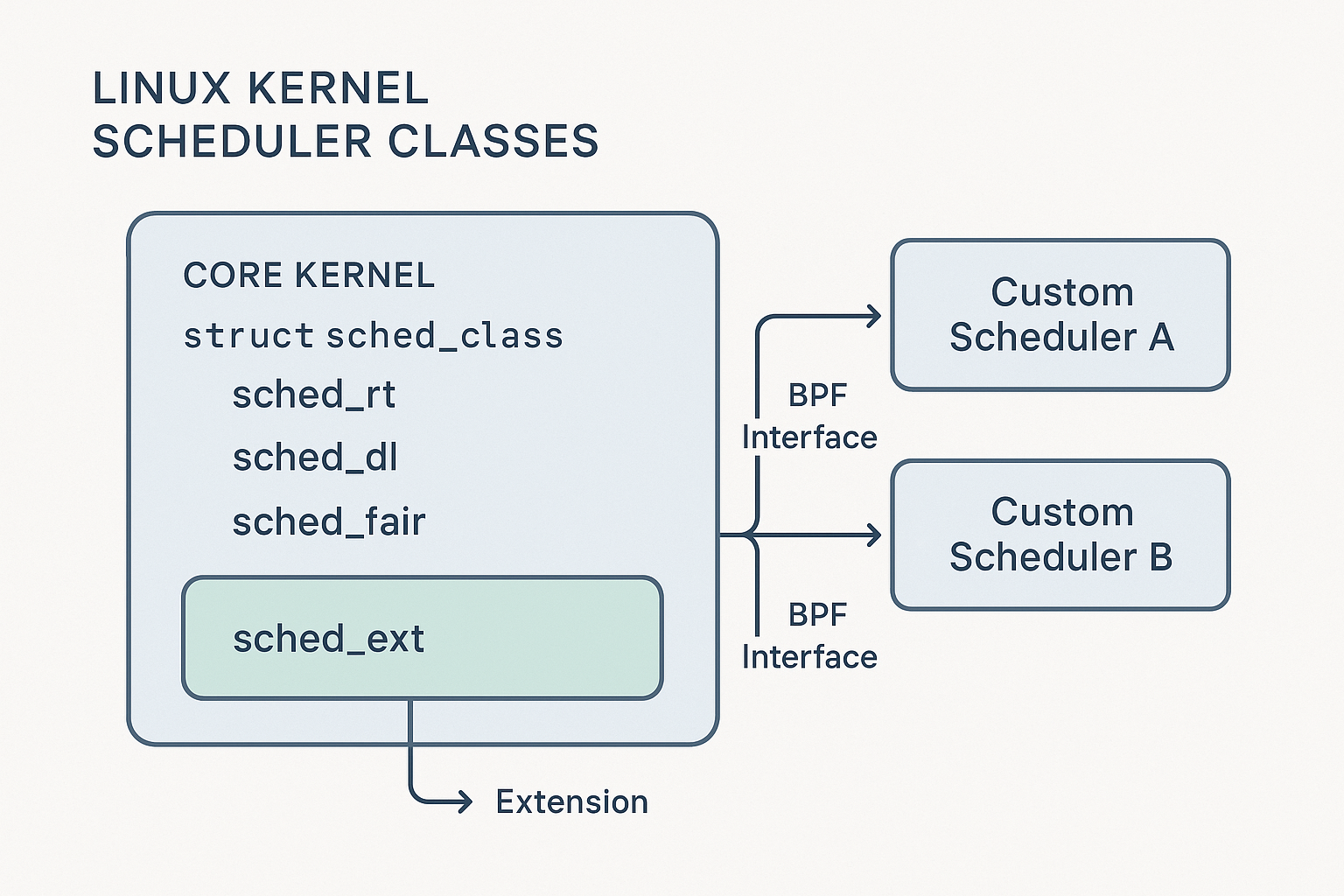

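sched_ext integrates with the kernel’s sched_class model by adding its own scheduling class (ext), whose callbacks delegate to whichever BPF scheduler is currently loaded. This delegation is what makes BPF-based scheduler modules pluggable.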

Raghu Bharadwaj

Known for his unique ability to turn complex concepts into deep, practical insights. His thought-provoking writings challenge readers to look beyond the obvious, helping them not just understand technology but truly think differently about it.

His writing style encourages curiosity and helps readers discover fresh perspectives that stick with them long after reading.

Categories of sched_ext Schedulers

The ecosystem features a rich variety of schedulers, grouped below by primary use case and design approach:

Demonstrative and Foundational

- scx_simple

A minimal “hello world” scheduler that supports both FIFO and weighted vtime ordering. It is ideal for understanding the core extension points and the eBPF API.

Use case: Education, demonstration.

- scx_central

Makes all scheduling decisions on a single central CPU. This spares the other CPUs most scheduler timer overhead, which pays off on large systems.

Use case: Virtualization, experimentation on topology effects.
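scx_simple's vtime mode can be modeled in a few lines of Python. This sketch follows its general shape and assumes three rules:

- All tasks sit in one shared queue ordered by virtual time.
- A running task is charged its used time scaled by 100/weight, so heavier tasks accrue vtime more slowly.
- A task waking from a long sleep has its vtime clamped, so it banks at most one slice of credit.

`SharedQueue`, `charge`, and `simulate` are hypothetical names. The 20 ms slice matches the default `SCX_SLICE_DFL`.

```python
import heapq
import itertools
from collections import Counter
from dataclasses import dataclass

SLICE_NS = 20_000_000  # 20 ms, the default sched_ext slice (SCX_SLICE_DFL)

@dataclass
class Task:
    name: str
    weight: int = 100  # 100 is the default (nice 0) weight
    vtime: int = 0

class SharedQueue:
    """Hypothetical single shared queue ordered by vtime, or by arrival when fifo=True."""

    def __init__(self, fifo: bool = False):
        self.fifo = fifo
        self.vtime_now = 0             # highest vtime seen starting to run
        self._heap: list = []
        self._seq = itertools.count()  # arrival order; breaks vtime ties

    def enqueue(self, task: Task) -> None:
        if self.fifo:
            key = 0  # equal keys: arrival order alone decides
        else:
            # Cap the credit a long sleeper can bank at one slice.
            task.vtime = max(task.vtime, self.vtime_now - SLICE_NS)
            key = task.vtime
        heapq.heappush(self._heap, (key, next(self._seq), task))

    def dispatch(self) -> Task:
        _, _, task = heapq.heappop(self._heap)
        if not self.fifo:
            self.vtime_now = max(self.vtime_now, task.vtime)
        return task

    def charge(self, task: Task, used_ns: int) -> None:
        # Higher-weight tasks accrue vtime more slowly, so they are picked more often.
        if not self.fifo:
            task.vtime += used_ns * 100 // task.weight

def simulate(picks: int) -> Counter:
    q = SharedQueue()
    for t in (Task("a", weight=100), Task("b", weight=200)):
        q.enqueue(t)
    counts: Counter = Counter()
    for _ in range(picks):
        t = q.dispatch()
        counts[t.name] += 1
        q.charge(t, SLICE_NS)  # assume each task burns its full slice
        q.enqueue(t)
    return counts

print(simulate(30))
```

For 30 picks, the weight-200 task `b` is chosen 20 times and the weight-100 task `a` 10 times.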

General-Purpose

- scx_rusty

A hybrid scheduler. The BPF side performs round-robin scheduling within each scheduling domain (by default, one per L3 cache). A Rust user-space component balances load across domains.

Use case: Production systems that want a flexible, tunable scheduler.

- scx_p2dq

A “pick-two” load balancer: it samples two random CPUs and places work on the less busy one, balancing locality against response time.

Use case: Systems needing balanced throughput and fairness.
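The “pick-two” idea is the classic power-of-two-choices technique. This minimal sketch assumes per-CPU queue length is the load signal. scx_p2dq's actual sampling (for example, CPUs versus cache domains) and its load metric may differ. `pick_two` and `place` are hypothetical helpers.

```python
import random

def pick_two(loads: list[int], rng: random.Random) -> int:
    """Power-of-two-choices: sample two distinct CPUs, return the less loaded one."""
    a, b = rng.sample(range(len(loads)), 2)
    return a if loads[a] <= loads[b] else b

def place(n_tasks: int, n_cpus: int, two_choices: bool, seed: int = 1) -> list[int]:
    """Place tasks one at a time and return the final per-CPU queue lengths."""
    rng = random.Random(seed)
    loads = [0] * n_cpus
    for _ in range(n_tasks):
        cpu = pick_two(loads, rng) if two_choices else rng.randrange(n_cpus)
        loads[cpu] += 1
    return loads

# Average load is 100 per CPU; compare how far the busiest CPU drifts above it.
print("random   max:", max(place(10_000, 100, two_choices=False)))
print("pick-two max:", max(place(10_000, 100, two_choices=True)))
```

Sampling only two candidates keeps each decision cheap. It still typically keeps the busiest queue much closer to the average than purely random placement.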

Gaming & Low-Latency

- scx_lavd

Latency-criticality Aware Virtual Deadline scheduler, built to minimize frame-time outliers in gaming and multimedia workloads (e.g., improving 1% low FPS in Steam Deck scenarios).

Use case: Gaming, multimedia playback, graphics workloads.
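The following is a generic virtual-deadline sketch, not LAVD's actual formula. Each runnable task gets a deadline equal to its consumed virtual time plus a budget that shrinks as its latency criticality grows. The task with the earliest deadline runs first. LAVD derives latency criticality from observed task behavior, but here the hypothetical `lat_cri` score is simply given. `BASE_DEADLINE_NS` is likewise a hypothetical constant.

```python
import heapq
from dataclasses import dataclass

BASE_DEADLINE_NS = 10_000_000  # hypothetical deadline budget for a non-critical task

@dataclass
class Task:
    name: str
    vtime: int        # virtual time already consumed
    lat_cri: int = 1  # hypothetical latency-criticality score, >= 1

def virtual_deadline(t: Task) -> int:
    # More latency-critical tasks get a tighter, earlier deadline.
    return t.vtime + BASE_DEADLINE_NS // t.lat_cri

def run_order(tasks: list[Task]) -> list[str]:
    """Earliest-virtual-deadline-first order of the given runnable tasks."""
    heap = [(virtual_deadline(t), i, t) for i, t in enumerate(tasks)]
    heapq.heapify(heap)
    return [heapq.heappop(heap)[2].name for _ in range(len(heap))]

tasks = [
    Task("compile", vtime=0, lat_cri=1),         # deadline 10 ms
    Task("render", vtime=3_000_000, lat_cri=5),  # deadline 5 ms
    Task("audio", vtime=2_000_000, lat_cri=2),   # deadline 7 ms
]
print(run_order(tasks))
```

The render thread runs first even though it has consumed the most virtual time. That is the kind of prioritization that protects frame times.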

- scx_bpfland

Blends vruntime accounting with interactivity boosts and NUMA/topology awareness.

Use case: Desktop, responsive UI environments.

- scx_cosmos

Deadline-based scheduling for soft real-time applications such as audio/video or XR workloads.

Use case: Soft RT, media servers.
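The “interactivity boost” in the scx_bpfland entry above can be sketched as a two-tier pick. Tasks that block and wake often (a high rate of voluntary context switches) are treated as interactive and served first. Each tier is ordered by vruntime. The threshold, the two-tier layout, and all names here are assumptions for illustration, not bpfland's actual implementation.

```python
from dataclasses import dataclass

INTERACTIVE_NVCSW_PER_SEC = 10.0  # hypothetical threshold, not bpfland's actual value

@dataclass
class Task:
    name: str
    vruntime: int         # weighted CPU time consumed so far
    nvcsw_per_sec: float  # voluntary context switches per second

def is_interactive(t: Task) -> bool:
    # Tasks that frequently block and wake (e.g. UI threads) count as interactive.
    return t.nvcsw_per_sec >= INTERACTIVE_NVCSW_PER_SEC

def pick_next(runnable: list[Task]) -> Task:
    """Serve interactive tasks first; within a tier, the lowest vruntime wins."""
    if not runnable:
        raise ValueError("no runnable tasks")
    interactive = [t for t in runnable if is_interactive(t)]
    return min(interactive or runnable, key=lambda t: t.vruntime)

tasks = [
    Task("compiler", vruntime=100, nvcsw_per_sec=0.5),
    Task("ui", vruntime=500, nvcsw_per_sec=60.0),
    Task("browser", vruntime=300, nvcsw_per_sec=25.0),
]
print(pick_next(tasks).name)
```

Here `browser` is picked. `compiler` has the lowest vruntime but is not interactive, so the boost lets the interactive tier jump ahead of it.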

Hybrid & Experimental

- scx_rustland

Moves most scheduling logic into user space (Rust), leaving only a thin BPF component in the kernel.

Use case: Prototyping, rapid iteration.
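The rustland split can be pictured as a narrow channel. The in-kernel side only reports runnable tasks and applies decisions; all ordering and placement happen in user space. In the real project that channel is built on BPF maps and ring buffers. This Python sketch substitutes two deques. `KernelShim` and `userspace_schedule` are hypothetical names.

```python
from collections import deque
from dataclasses import dataclass

@dataclass
class QueuedTask:
    pid: int
    cpu: int       # CPU the task last ran on
    vruntime: int

@dataclass
class DispatchedTask:
    pid: int
    cpu: int       # CPU chosen by the user-space policy
    slice_ns: int

class KernelShim:
    """Hypothetical stand-in for the thin BPF side: it forwards tasks and applies decisions."""

    def __init__(self) -> None:
        self.to_user: deque[QueuedTask] = deque()        # runnable tasks reported upward
        self.from_user: deque[DispatchedTask] = deque()  # decisions sent back down

    def enqueue(self, task: QueuedTask) -> None:
        self.to_user.append(task)  # no policy here: just report the task

    def apply(self) -> list[DispatchedTask]:
        applied = list(self.from_user)  # a real shim would queue each task on its CPU
        self.from_user.clear()
        return applied

def userspace_schedule(shim: KernelShim, slice_ns: int = 5_000_000) -> None:
    """All policy lives here: drain reported tasks, order by vruntime, keep each on its CPU."""
    batch = []
    while shim.to_user:
        batch.append(shim.to_user.popleft())
    for t in sorted(batch, key=lambda t: t.vruntime):
        shim.from_user.append(DispatchedTask(t.pid, t.cpu, slice_ns))

shim = KernelShim()
for pid, cpu, vr in [(101, 0, 30), (102, 1, 10), (103, 0, 20)]:
    shim.enqueue(QueuedTask(pid, cpu, vr))
userspace_schedule(shim)
print([d.pid for d in shim.apply()])
```

Even in this toy, every decision crosses the kernel/user boundary twice. That overhead is roughly what the design accepts in exchange for writing policy in ordinary Rust and iterating on it quickly.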

- scx_tickless

Suppresses periodic scheduler ticks on selected CPUs to minimize jitter, which is valuable where HPC or cloud workloads need deterministic latency.

Use case: HPC, cloud workloads, benchmarking.

- scx_flatcg

Flattens the cgroup hierarchy into a single level by compounding each cgroup’s weight share at every level. This reduces CPU-controller overhead in deeply nested workloads.

Use case: Complex containerized/multitenant environments.
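The flattening can be checked by hand with an example hierarchy like the one described in scx_flatcg’s source comments: A (100) containing B (100) and C (100), alongside D (200). B wins half of A’s share, and A wins a third at the top level. B’s flattened share is therefore 1/2 × 1/3 = 1/6. The sketch assumes every leaf cgroup is busy; flatcg recomputes shares as cgroups become active or idle. `Cgroup` and `flatten` are hypothetical names.

```python
from dataclasses import dataclass, field
from fractions import Fraction

@dataclass
class Cgroup:
    name: str
    weight: int
    children: list["Cgroup"] = field(default_factory=list)

def flatten(cg: Cgroup, share: Fraction = Fraction(1)) -> dict[str, Fraction]:
    """Map each leaf cgroup to its compounded (flattened) share of the CPU."""
    if not cg.children:
        return {cg.name: share}
    total = sum(c.weight for c in cg.children)
    flat: dict[str, Fraction] = {}
    for child in cg.children:
        # The child's share at this level, multiplied by every share above it.
        flat.update(flatten(child, share * Fraction(child.weight, total)))
    return flat

root = Cgroup("root", 100, [
    Cgroup("A", 100, [Cgroup("B", 100), Cgroup("C", 100)]),
    Cgroup("D", 200),
])
print(flatten(root))  # {'B': Fraction(1, 6), 'C': Fraction(1, 6), 'D': Fraction(2, 3)}
```

Once the shares are flattened, B, C, and D can be scheduled as peers with weights in the ratio 1:1:4. Nested levels no longer need to be walked on each decision.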

Management Tools

The following tools coordinate and automate the use of these schedulers:

- scx_loader
  - A persistent management daemon exposing a D-Bus API.
  - Handles scheduler lifecycles, configuration transitions, and integration with desktop and system managers.

- scxctl
  - A command-line tool for listing, loading, and switching schedulers.
  - Supports scripting and workflow automation.

- Predefined Modes
  - Turnkey preset profiles (e.g., Gaming, Server, Power Saving) that target different priority mixes and efficiency settings.

Summary

The sched_ext ecosystem represents a leap forward in kernel scheduling: it offers depth through its novel BPF-driven extensibility, breadth through its range of schedulers, and usability via standard tooling. Whether in research, gaming, or datacenter production, workload-specific scheduling has never been more practical or accessible.